AI Inference Companies - NVIDIA Corporation (US) and Advanced Micro Devices, Inc. (US) are the Key Players

The global AI inference market is expected to be valued at USD 106.15 billion in 2025 and is projected to reach USD 254.98 billion by 2030, growing at a CAGR of 19.2% from 2025 to 2030. Growth is driven largely by advances in AI inference chips and specialized AI inference hardware designed to handle the computational demands of real-time data processing efficiently. As industries increasingly deploy AI models for applications such as autonomous driving, healthcare diagnostics, and smart assistants, demand for high-performance AI inference chips has surged, enabling faster and more energy-efficient processing at the edge and in data centers. NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), Intel Corporation (US), SK HYNIX INC. (South Korea), and SAMSUNG (South Korea) are the major players in the AI inference market. Market participants have diversified their offerings and expanded their global reach through strategic growth approaches such as new product launches, collaborations, alliances, and partnerships.
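As a quick consistency check on the forecast, the standard compound annual growth rate formula, (end / start)^(1 / years) - 1, can be applied to the two market-size figures quoted above. This is a minimal Python sketch; the function name `cagr` is illustrative and is not taken from the study's methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted above, in USD billion (2025 and 2030).
rate = cagr(106.15, 254.98, 2030 - 2025)
print(f"CAGR 2025-2030: {rate:.1%}")  # prints "CAGR 2025-2030: 19.2%"
```

The computed rate rounds to 19.2%, matching the stated CAGR.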

For instance, in October 2024, Advanced Micro Devices, Inc. (US) launched its 5th Gen AMD EPYC processors for AI, cloud, and enterprise workloads. The processors are designed to maximize GPU acceleration, per-server performance, and AI inference performance, and the AMD EPYC 9005 series provides density and performance for cloud workloads.

In October 2024, Intel Corporation (US) and Inflection AI (US) collaborated to accelerate AI adoption among enterprises and developers by launching Inflection for Enterprise, an enterprise-grade AI system. Powered by Intel Gaudi and Intel Tiber AI Cloud, the system delivers customizable, scalable AI capabilities, enabling companies to deploy AI co-workers trained on their own data and policies.

Major AI inference companies include:

- NVIDIA Corporation (US)

- Advanced Micro Devices, Inc. (US)

- Intel Corporation (US)

- SK HYNIX INC. (South Korea)

- SAMSUNG (South Korea)

- Micron Technology, Inc. (US)

- Apple Inc. (US)

- Qualcomm Technologies, Inc. (US)

- Huawei Technologies Co., Ltd. (China)

- Google (US)

- Amazon Web Services, Inc. (US)

- Tesla (US)

- Microsoft (US)

- Meta (US)

- T-Head (China)

- Graphcore (UK)

- Cerebras (US)

NVIDIA Corporation:

NVIDIA Corporation (US) is a leading multinational technology company that designs and manufactures graphics processing units (GPUs), system-on-chips (SoCs), and artificial intelligence (AI) infrastructure products. The company is known for transforming the gaming, data center, AI, and professional visualization markets through its GPU technology. Its AI and deep learning platforms, driven by high-performance AI inference chips and hardware, are widely recognized as leading enablers of AI computing and ML applications, and the company offers a complete stack of AI inference hardware, software, and services. It operates through two reportable segments: Compute & Networking and Graphics. NVIDIA offers CPUs and GPUs for servers, PCs, and gaming under the Compute & Networking segment. It provides accelerated computing platforms and networking solutions, including the NVIDIA Data Center platform, designed to handle compute-intensive workloads such as AI, data analytics, and scientific computing. NVIDIA's networking products cover InfiniBand and Ethernet solutions that provide the high-performance networking needed for AI and HPC workloads spanning thousands of compute nodes. It also provides GPU acceleration for autonomous machines, laptops, desktops, supercomputers, and data centers. The company invests heavily in research and development focused on advancing GPU architecture, AI, and ML technologies.

Advanced Micro Devices, Inc.:

Advanced Micro Devices, Inc. (US) is a provider of semiconductor solutions that designs and integrates technology for graphics and computing. Its products include accelerated processing units (APUs), processors, graphics, and system-on-chips. It operates through four reportable segments: Data Center, Gaming, Client, and Embedded. The Data Center segment portfolio includes server CPUs, FPGAs, DPUs, GPUs, and Adaptive SoC products for data centers, and the company offers its AI inference hardware under this segment. The Client segment comprises chipsets, CPUs, and APUs for desktop and notebook personal computers. The Gaming segment covers discrete GPUs, semi-custom SoC products, and development services for entertainment platforms and computing devices. The Embedded segment includes embedded FPGAs, GPUs, CPUs, APUs, and Adaptive SoC products. AMD serves a range of applications, including automotive, defense, industrial, data center and computing, consumer electronics, networking & telecommunications, and healthcare, and offers a range of chips optimized for AI and ML workloads.

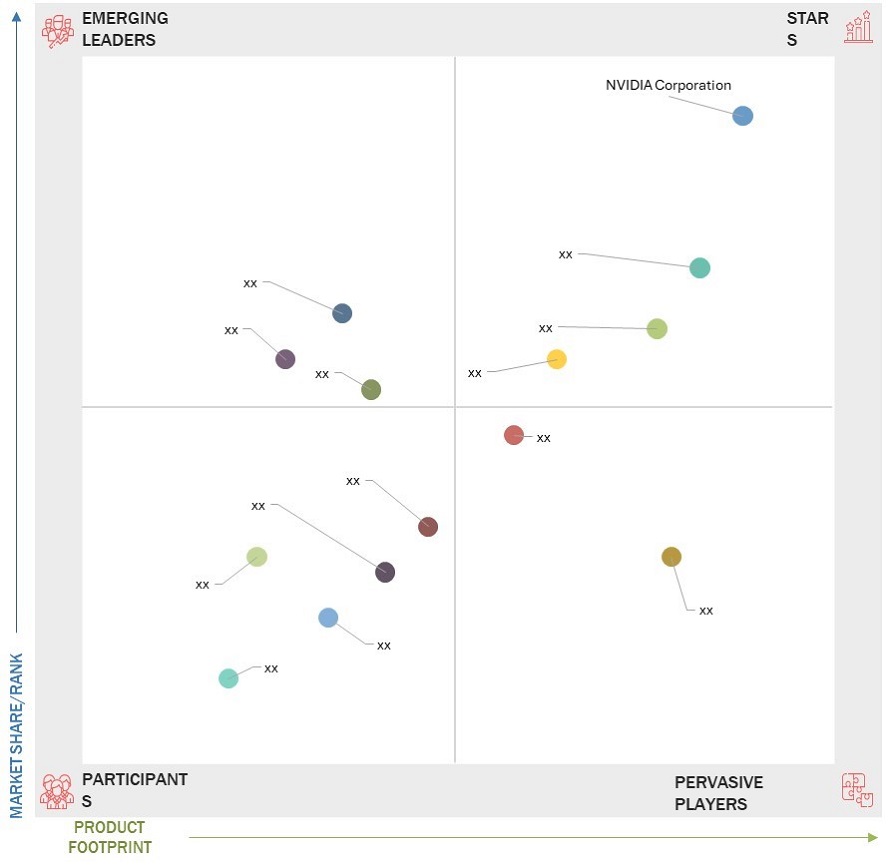

Company Evaluation Matrix: Key Players, 2024

The company evaluation matrix assesses key players in the AI inference market along two primary dimensions: strength of product portfolio and business strategy excellence. Companies are evaluated on factors such as geographic reach, success of development projects, and alignment with industry trends. The product portfolio dimension examines how firms develop and introduce AI inference products; a player's portfolio strength depends on factors including innovation, technological developments, and the breadth of its AI inference solutions. The matrix classifies companies into four categories (Stars, Emerging Leaders, Pervasive Players, and Participants), providing a structured view of competitive standing.

Market Ranking

The AI inference market is consolidated, with the top three players accounting for roughly 80-82% of the market. NVIDIA Corporation (US) ranks first, owing to its pioneering advances in GPU technology and its comprehensive ecosystem of AI inference hardware and software optimized for high-performance, real-time AI workloads. Advanced Micro Devices, Inc. (US) ranks second, leveraging its EPYC CPUs and Instinct GPUs to deliver competitive AI inference solutions, particularly in high-performance computing and data center environments. Intel Corporation (US), ranked third, is traditionally known for CPUs but has made significant strides in AI inference through its Habana Labs Gaudi processors and its Xeon Scalable processors, which are optimized for AI workloads. However, Intel’s slower transition to specialized AI inference hardware compared to NVIDIA and AMD has left it slightly behind. Together, these companies drive innovation in the AI inference market, with NVIDIA’s early-mover advantage and ecosystem integration giving it a clear edge over its competitors.

Related Reports:

AI Inference Market by Compute (GPU, CPU, FPGA), Memory (DDR, HBM), Network (NIC/Network Adapters, Interconnect), Deployment (On-premises, Cloud, Edge), Application (Generative AI, Machine Learning, NLP, Computer Vision) - Global Forecast to 2030

Contact:

Mr. Rohan Salgarkar

MarketsandMarkets™ INC.

630 Dundee Road

Suite 430

Northbrook, IL 60062

USA : 1-888-600-6441
